Homework #4:

Dimensionality reduction II: t-SNE, UMAP, Isomap, and autoencoders

MAP573 Team

10/20/2020

Preliminaries

Information

Homework is due Sunday 11/01 23:59 in Rmd (see assignment in Moodle). There are two independent exercises. In the second exercise, you have to train an auto-encoder, a type of neural network. Depending on your computer and on the number of epochs you choose, it may take more or less time to run. In any case, it should not run for more than 5 minutes. If you have issues with your computer, please write it so that we can take it into account for the grading.

Beware: you must return your .Rmd file and a knitted .html file. Be careful, some points are attributed to clarity and care (especially when it comes to plots: you should always rename the axis, add a title if needed, choose a pleasant background theme, etc…).

Package requirements

We start by loading a couple of useful packages. Please install them using install.packages(“name_of_the_pacakge”) if not already done.

library(tidyverse) # advanced data manipulation and vizualisation

library(mnormt) # Generation of Gaussian distributions

library(Rtsne) # t-SNE

library(cluster) # Tools for clustering

library(dimRed) # Dimensionality reduction package

library(umap) # UMAP

library(reticulate) # Package to mix Python and R codeExercise 1

This exercise aims at comparing three non-linear dimensionality reduction techniques : t-SNE, Isomap, and UMAP.

1) Creation of the dataset

Let us first create an artificial dataset composed of 1500 samples generated from 3 different multivariate Gaussian distributions (500 samples each, 10 dimensions) with mean respectively mean1, mean2, mean3 and covariance cov (the same for the 3 distributions). Your dataframe must be in a tibble format with colnames X1, … , X10. You must also add a column containing the cluster label, i.e. the “number” of the distribution the sample belongs to: 1, 2 or 3. You can use the function rmnorm for the package mnormt. Name your dataset df.

#Means of the 3 Gaussian distributions

mean1 = rep(1,10)

mean2 = rep(2,10)

mean3 = rep(3,10)

#Covariance of the 3 Gaussian distributions

cov = 1.5*diag(10)

#TODO : create dataset df

?rmnormLet us separate the training data \(X\) and the true cluster labels \(y\) for the rest of the analysis:

Separate the data \(X\) and the true data labels \(y\) by running the code below:

# X = df %>% select(-cluster)

# y = df %>% select(cluster)2) First dataset plot

Using geom_density, plot the distribution of the data facetted by dimension (X1 to X10) and filled by cluster number.

Hint: you can use pivot_longer to ease the use of facet_wrap as we did in Homework 2.

#TODO : plot data distribution facetted by dimension3) t-SNE

This section aims at presenting t-SNE (t-distributed Stochastic Neighbours Embedding). We present briefly the method below but you can learn more about it by reading the original publication by Van der Maaten, Hinton et al. (2008) here. t-SNE is often used to visualize complex data in 2 dimensions. The idea of t-SNE is to embed the data on a small dimension manifold by respecting data closeness: 2 samples “closed” in the original space are “closed” in the low dimension manifold. The similarity between two samples xi and xj (in this order) in the original data space (high dimensional) stands for the probabily that xi would pick xj at its neighbor under the condition that neighbors are picked in proportion to their probability density under a Gaussian distribution centered at xi.

The variances of the assumed Gaussian distributions rely on the choice of hyperparameter perplexity (in a complex way, see article for details). You can remember that higher perplexity leads to higher variance. To simplify, one often says that the perplexity of the model is a smooth measure of the correct number of neighbors of each datapoint. It typically takes values between 30 and 50.

The similarity between the two embeddings of xi and xj in the lower dimensional manifold, named yi and yj respectively, stands for the probabily that yi would pick yj at its neighbor under the condition that neighbors are picked in proportion to their probability density under a Student t-distribution with one degree of freedom centered at xi.

t-SNE minimizes, using Gradient Descent, a symmetrized version of the Kullback-Leibler divergence between the distributions of similarity in the original data space and in the low dimensional manifold.

- Using the function Rtsne from the package Rtsne, compute a projection of the 1500 samples on a manifold of dimension 2 using default perplexity argument (30).

?RTsne## No documentation for 'RTsne' in specified packages and libraries:

## you could try '??RTsne'#TODO : your code here- Plot the 2D projections computed by t-SNE in the previous questions using geom_point. Color the points by their true cluster labels. Comment your results.

#TODO : your code hereYour comment here

- Compute the Euclidean distance matrix between all 1500 embedded points in the projected manifold rendered by t-SNE. (The distance matrix should be of dimension 1500x1500). Then, compute the averaged silhouette coefficient among all 1500 samples: it measures how close each sample is from other samples of its cluster in comparison to how far it is from samples in other clusters. The silhouette coefficient enables us to assess clustering quality.

Hint: you can use the function rdist from package fields to compute the distance matrix and the function silhouette from package cluster to compute the silhouette coefficient.

?rdist## No documentation for 'rdist' in specified packages and libraries:

## you could try '??rdist'?silhouette

#TODO : Compute distance matrix and silhouette coefficient.- Let us now try to optimize the hyperparameter perplexity. Run t-SNE and compute the averaged silhouette coefficient for various values of perplexity between 2 and 300. Plot the results.

#Plot silhouette coefficients in function of the perplexity

#TODO : Your code here- You should see that the silhouette coefficient increases with the perplexity. It is thus tempting to use the biggest perplexity possible to get the best silhouette coefficient. Why does it yet not lead to the best dimensionality reduction model? Using, the “elbow” method, propose a value for the perplexity coefficient.

Your answer here

4) Isomap

This section aims at experimenting Isomap method for dimensionality reduction. It is an extension of MDS (MultiDimensional scaling) for geodesic distance. Indeed, points are projected on a neighborhood graph with connections between each point and its K nearest neighbors (in terms of Euclidean distance). Each edge is weighted by the euclidean distance between the two nodes. The geodesic distance between two points is then computed as the sum of the weights of the edges in the shortest path linking the two points (which may be computed with Dijkstra’s algorithm for instance). Isomap then computes the eigenvectors and eigenvalues of the geodesic distance matrix. The embeddings stand for the eigenvectors of the biggest eigenvalues.

- Using the function embed from the package dimRed, compute the projections of the samples on a 2-dimensional manifold using isomap. Plot the results colored by true cluster belongings as you did for t-SNE.

?embed## Help on topic 'embed' was found in the following packages:

##

## Package Library

## dimRed /usr/share/miniconda/envs/MAP573/lib/R/library

## stats /usr/share/miniconda/envs/MAP573/lib/R/library

##

##

## Using the first match ...- As you did for t-SNE, compute the silhouette coefficients of the isomap algorithm for a few values of K (number of neighbors considered in the neghborhood graph) coming from 5 to 100. Plot the results and decide on a good value of K for your database.

#TODO : your code hereYour answer here

5) UMAP

Let us have a look at a third and last method: UMAP (Uniform Manifold Approximation and Projection). UMAP assumes that data is uniformly distributed on Riemannian manifolds. It learns a lower-dimensional manifold which retains the topological structure of the Riemannian manifolds of the original data. See this publication for more details.

- Using the function umap from the package umap, compute the projections of the samples on a 2-dimensional manifold using UMAP. You can keep the default hyperparameter values. Plot the results colored by true cluster belongings as you did for t-SNE and Isomap.

?umap

#TODO : Your code here- UMAP takes as argument several hyper-parameters. Among them is the number of neighbors K to consider. This parameter controls how UMAP balances local versus global structure in the data. It does this by constraining the size of the local neighborhood UMAP will look at when attempting to learn the manifold structure of the data. As you did for t-SNE and Isomap, compute the silhouette coefficient of the UMAP algorithm results for a few values of K coming from 5 to 100. Plot the results and decide on a good value for K for your database.

#TODO : Your code hereYour comment here

Note that isomap is a stochastic algorithm, meaning that it does not return exactly the same results when launched twice on the same dataset. You can set a seed in the config parameters to cope with this issue. When choosing the hyperparameters, it is highly recommended to run the algorithm several times and to average the results. (Do not do it for this homework: this will be too long to run).

6) Comparison of computation time

Finally, compute the computation time (using Sys.time() for instance) for each of the 3 methods (t-SNE, Isomap, and UMAP) for different data sizes (from 300 to 1500, each with step 300).

To test different data sizes, you can sample a given number of rows of your dataset with stratification on the true cluster label (this means that you should sample the same number of samples from clusters 1, 2, and 3). Then, plot the computation time with respect to the data size for each of the 3 methods using ggplot. You can use the values of the hyperparameters (perplexity and K) you have found as being the best suited to your data in the last questions. Briefly comment on the results.

#TODO :Your code hereBriefly comment here

Exercise 2

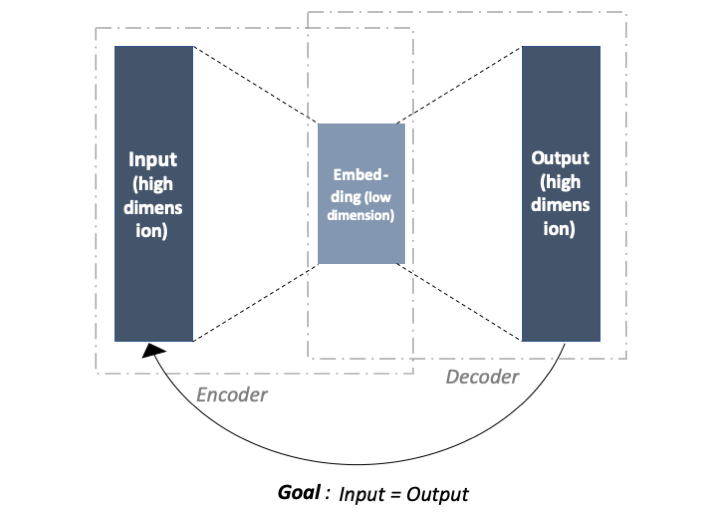

This exercise focuses on Auto-Encoders (AEs) which are a special type of Neural Networks used for dimensionality reduction. Auto-encoders are neural networks trained to learn a copy of the input.

It is composed of two parts: an encoder and a decoder. The input is first encoded by the encoder, then decoded by the decoder. The output of the encoder stands for the data embeddings. The output of the decoder has the same dimension as the input since the ultimate goal of the AE is to copy the input into the output of the decoder. See the figure below for an illustration.

The encoder and the decoder are composed of layers which are the concatenation of linear layers and non-linear activation function. The weights of the linear layers are trained through backpropagation and gradient descent.

You can read more on AutoEncoders here. Indeed, if you are not familiar with neural networks at all, this book written by Goodfellow, Bengio, Courville et al. is the perfect read for you. Note that you do not need to know more about Auto-Encoders and Neural Networks to go through this exercise.

1) Import and plot data

We will work on a sampled version of the MNIST dataset (which you may aleady know from Homework 3): 10,000 labeled handwritten digits from 0 to 9.

Please load the sampled dataset by running the code below. X is the matrix of images. The images are composed of 28x28 pixels in greyscale color, meaning that the value of each pixel varies from 0 (white) to 255 (black). There are 10 labels standing for the digit represented in each image. You should see that the classes are roughly balanced with around 1000 samples per class.

X <- read.csv("data/images_mnist_10K.csv",header = FALSE)

y <- read.csv("data/labels_mnist_10K.csv", header = FALSE)

#dim(X)

#table(y$V1)Write a code (you can reuse your work from Homework 3) to plot the 12 first images of the dataset on a grid (3 rows of 4 images).

#TODO : Your code here2) Define the auto-encoder model

We are going to use Pytorch, which is a Python module, to create an AutoEncoder network and train it in our digits.

We will use the R library “reticulate” to articulate Python and R code. We will typically train the AutoEncoder model in Python and then analyze and plot results in R. You should have already set your environment for reticulate during the “TD”.

Please modify the following code in accordance with your configuration and run it.

reticulate::use_virtualenv("r-reticulate")

reticulate::py_config() #Check your config## python: /usr/share/miniconda/envs/MAP573/bin/python3

## libpython: /usr/share/miniconda/envs/MAP573/lib/libpython3.7m.so

## pythonhome: /usr/share/miniconda/envs/MAP573:/usr/share/miniconda/envs/MAP573

## version: 3.7.8 | packaged by conda-forge | (default, Jul 31 2020, 02:25:08) [GCC 7.5.0]

## numpy: /usr/share/miniconda/envs/MAP573/lib/python3.7/site-packages/numpy

## numpy_version: 1.19.4

## umap: [NOT FOUND]

##

## python versions found:

## /usr/share/miniconda/envs/MAP573/bin/python3

## /usr/bin/python3

## /usr/bin/pythonThen load the python modules we are going to use. Please install them locally before if you have not done it already. You can use “pip install name_module” in your terminal for instance.

import torch

import torch.nn as nn

import torch.optim as optim

import sklearn.model_selection as sk

import numpy as npLet us now define the AutoEncoder model. In Pytorch, a model is defined as a class inheriting from nn.Module(). You should at least define an init function which sets the parameters and the network layers and a forward function which corresponds to a forward pass into the network (encoder + decoder).

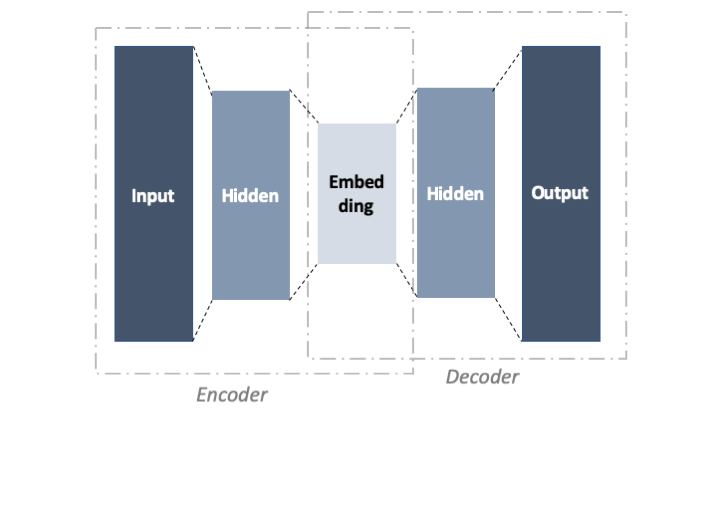

For this exercise, we will stick to an auto-encoder with one hidden layer (see Figure below). It takes three arguments: the input shape, the hiden shape and the embedding shape. Each layer is followed by ReLU which is a common non-linear activation function used for neural networks

Please uncomment and complete the following code by replacing the TODO with either input_shape, hidden_shape or embedding_shape.

# class AE(nn.Module):

#

# def __init__(self, input_shape, hidden_shape, embedding_shape):

#

# super(AE, self).__init__()

#

# self.encoder = nn.Sequential(

# nn.Linear(#TODO,#TODO),

# nn.ReLU(True),

# nn.Linear(#TODO,#TODO),

# nn.ReLU(True))

#

# self.decoder = nn.Sequential(

# nn.Linear(#TODO,#TODO),

# nn.ReLU(True),

# nn.Linear(#TODO,#TODO),

# nn.ReLU(True))

#

# def forward(self,x):

# x = self.encoder(x)

# x = self.decoder(x)

# return xOnce the AutoEncoder class is defined, we should create an object of this class and pass it the correct dimension of layers. Please create an instance of the class AE named model with input_shape = 784, hidden_shape = 350 and embedding_shape = 179.

We chose to work with Adam optimizer and a learning rate of 0.001. Finally, the criterion (the loss function that will be minimized) is Mean Squared Error: we will try to minimize the difference between the input and the reconstructed input.

# Create a model from `Autoencoder` class

#model = #TODO

# Adam optimizer with learning rate 1e-3

# optimizer = optim.Adam(model.parameters(), lr=1e-3)

# Mean-squared error loss

# criterion = nn.MSELoss()Let us now work on the data: the 10,000 digit samples will be split into a train, a validation and a test set. The train set is used to train the network. The validation set enables us to set the hyperparameters for training. The test set is used to assess the model trained on the train set with the set of best hyperparameters found with the validation set. We use the function train_test_split from sklearn to split the train, validation, and test set. This is a two-step procedure: 1) Split test and train + validation and 2) Split train + validation into train and validation. Please uncomment the following code and fill the argument test_size on the chunck below so that: 60% of 10,000 samples are in train set, 20% are in the validation set, 20% are in the test set.

# X_train_val, X_test, y_train_val, y_test = sk.train_test_split(r.X, r.y, test_size= #TODO)

# X_train, X_val, y_train, y_val = sk.train_test_split(X_train_val, y_train_val, test_size= #TODO)

# print(X_train.shape)

# print(X_val.shape)

# print(X_test.shape)The model is trained for a given number of epochs. An epoch corresponds to a complete pass through the training set. The training set is divided into batches of fixed size. We chose here batches of size 128. Pytorch provides a class of iterable object DataLoader to automatically create the batches.

The validation and test set are not split into batches since they are not used for training: we only convert them to Pytorch tensors. Please uncomment and run the code below.

# #Create Dataoader objects with will automaticaly create batches for test and train

# train_loader = torch.utils.data.DataLoader(

# torch.tensor(X_train.to_numpy()).float(),

# batch_size=128,

# shuffle=True

# )

#

# X_val_torch = torch.tensor(X_val.to_numpy()).float()

# X_test_torch = torch.tensor(X_test.to_numpy()).float()3) Train the model

Let us finally train the model. Choose the number of epochs to run (start low and increase it). The following code loops on the number of epochs and on the train batches. The MSE loss is computed for each batch after a forward pass through the network. The function backward then computes the gradient of the loss with respect to the network parameters. Finally, the network parameters are updated through the optimizer (Adam here).

The mean training loss averaged on all the batches of the code is stored in a list to be analyzed afterward. Your task is to compute the MSE loss on the validation set once the training loop on all the batches done. Please uncomment, fill the code below and run it.

Important: the goal of this exercise is to discover Auto-Encoders, Pytorch, and reticulate. Please do not spend time on tuning all the hyperparameters. Plus, do not run too many epochs if your computer does not have much calculus power. To give you an idea, a 100 epochs run in less than 1 minute on my computer.

# epochs = 10

# val_losses = []

# train_losses = []

#

# for epoch in range(epochs):

#

# loss = 0

# for batch_features in train_loader:

#

# # Reset the gradients back to zero

# # PyTorch accumulates gradients on subsequent backward passes

# optimizer.zero_grad()

#

# # Compute reconstructions

# outputs = model(batch_features)

#

# # Compute training reconstruction loss

# train_loss = criterion(outputs, batch_features)

#

# # Compute accumulated gradients

# train_loss.backward()

#

# # Perform parameter update based on current gradients

# optimizer.step()

#

# # Add the mini-batch training loss to epoch loss

# loss += train_loss.item()

#

# # Store the mean training loss on epoch

# loss = loss / len(train_loader)

# train_losses.append(loss)

#

# # Compute loss on validation set

# # TODO : Add your code here

#

# # Store the validation loss on epoch

# val_losses.append(val_loss.item())

#

# # Display the epoch training loss

# print("epoch : {}/{}, train_loss = {:.6f}, val_loss = {:.6f}".format(epoch + 1, epochs, loss,val_loss))Let us come back to R! Plot the evolution of the training and validation loss in function of the epochs. Comment on your result. Can you train your network with more epochs? Should you stop the training process before the last epoch?

Note that one can acces val_losses and train_losses created in the chunck above with py$val_losses and py$train_loss.

#print(py$val_losses)

#print(py$train_losses)

#TODO : Your code hereYour comment here

By analyzing the behavior of the network on the validation set, we can tune all the hyperparameters of the model: number of epochs, learning rate, batch size, layer shapes, … Please do not do it for this homework as this may be too time-consuming. Indeed, let’s keep the parameters defined above and the weights of the model as it was after the last training you did. It does not matter if it is not perfectly trained for the mark.

Let us now focus on assessing the algorithm on the test set. First, compute the reconstructed outputs (forward pass through the auto-encoder) for the test set images. Name your table outputs_test. Print the loss on the test set and comment.

# TODO : Your code hereYour comment here

Next, print the five first original and reconstructed test images by running the code below (1 column for the original digit, 1 column for the reconstructed). Comment on the results.

#TODO : Your code hereYour comment here

4) t-SNE on embeddings

The goal of this last section is to apply t-SNE to the embeddings we get (dimension : 179) in order to visualize them in 2D.

First, compute the embeddings of the test set images (pass through the encoder only).

#TODO : Your code hereNext, apply t-SNE to the embeddings with t-SNE output dimension 2 and the value of perplexity you want (no need to tune the perplexity).

#TODO : Your code hereFinally, print the 2D t-SNE embeddings as points colored by their true label. Comment on the results.

#TODO : Your code hereYour comment here

Through the slides, TD and homework, you should now have a good overview of dimensionality reductions methods (linear and non-linear) that exist. You can have a look at this page which compares several methods and their execution time for the S-curve problem you have seen during the TD. Among them are Isomap and t-SNE that you used during the Homework.